Project at a glance

The retrieval and marketability of visual content depend largely on the availability of high-quality image metadata, which enables customers to locate relevant content efficiently in large image collections.

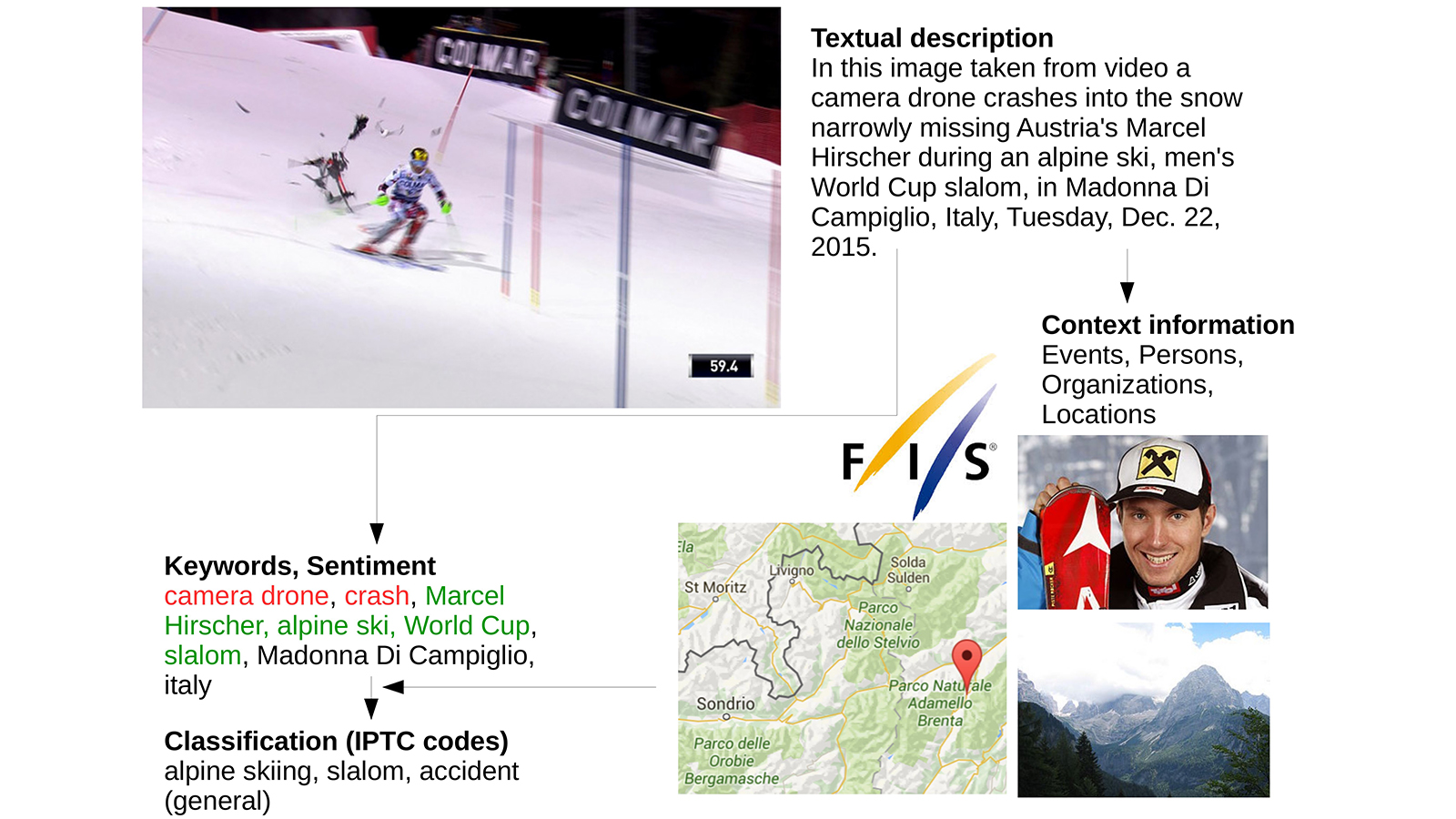

The IMAGINE project develops advanced information extraction methods which exploit the convergence between textual image descriptions and image content as well as linked open data to automatically obtain relevant metadata such as keywords, named entities and topics.

Project

IMAGINE: Cross-modal information extraction for improved image metadata

Team

Kuntschik Philipp

Funding

Keystone AG, Innosuisse (Commission for Technology and Innovation, CTI)

Duration

November 2015 – April 2017

Starting situation

The discoverability and marketability of visual content – such as photographs, graphics, and videos – depend largely on the availability of high-quality metadata that enables customers to efficiently locate relevant content in extensive collections. In practice, keywording is often carried out manually; it is therefore costly and only economically feasible for a small proportion of the available content. To increase the probability of images being displayed (and therefore purchased) during searches, quality control is highly important, especially for visual content from third parties, as this content frequently contains irrelevant keywords.

Project goal

Within the scope of the research project, technologies will be developed that enable the automatic extraction of information (metadata) from image descriptions and thereby aim to eliminate the aforementioned restrictions. Furthermore, it will become possible to automatically link images with external content and information sources, e.g. Wikipedia and Google Maps. The accompanying graphic illustrates the procedure: based on the image description, events (FIS – Alpine Skiing World Cup), persons (Marcel Hirscher), and places (Madonna di Campiglio), as well as associated images and maps, are identified, and keywords (camera drone, crash, slalom, etc.) are extracted in a fully automated way. These can then be linked with background information on Google Maps, Wikipedia, etc. to optimise the image search or to group photos and illustrations by topics, persons, places, or events.
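The extraction step described above can be illustrated with a minimal Python sketch. It is not the IMAGINE implementation: the lookup tables, the `ImageMetadata` class, the `extract_metadata` function, and the sample description are all hypothetical, and plain substring matching stands in for the project's named entity linking and keyword extraction components.

```python
from dataclasses import dataclass, field

# Hypothetical lookup tables for illustration only; a real system would
# draw on linked open data sources rather than hard-coded entries.
ENTITY_GAZETTEER = {
    "marcel hirscher": ("person", "Marcel Hirscher"),
    "madonna di campiglio": ("place", "Madonna di Campiglio"),
    "alpine skiing world cup": ("event", "FIS Alpine Skiing World Cup"),
}
KEYWORD_VOCABULARY = ["camera drone", "crash", "slalom"]


@dataclass
class ImageMetadata:
    persons: list = field(default_factory=list)
    places: list = field(default_factory=list)
    events: list = field(default_factory=list)
    keywords: list = field(default_factory=list)


def extract_metadata(description: str) -> ImageMetadata:
    """Collect entities and keywords found in an image description.

    Matching is a case-insensitive substring test, so "crash" also
    matches "crashes". This is a deliberate simplification.
    """
    text = description.lower()
    meta = ImageMetadata()
    buckets = {
        "person": meta.persons,
        "place": meta.places,
        "event": meta.events,
    }
    for surface_form, (kind, label) in ENTITY_GAZETTEER.items():
        if surface_form in text:
            buckets[kind].append(label)
    meta.keywords = [kw for kw in KEYWORD_VOCABULARY if kw in text]
    return meta


# Illustrative description modelled on the example in the text above.
SAMPLE_DESCRIPTION = (
    "A camera drone crashes behind Marcel Hirscher during the slalom "
    "at the Alpine Skiing World Cup in Madonna di Campiglio."
)

if __name__ == "__main__":
    print(extract_metadata(SAMPLE_DESCRIPTION))
```

Keeping persons, places, events, and keywords in separate fields mirrors the grouping criteria named in the text, so the same record could drive both search and grouping.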

Status

- 14 February 2018 – the article “Bilder finden statt suchen - Künstliche Intelligenz ermittelt Metadaten und Bildcluster” has been published in the PICTA magazine 1/2018.

- 12 July 2017 – the Bündner Tagblatt has published the article “Intelligente Bildsuche”, which covers the IMAGINE project.

- 23 June 2017 – Karl Csoknyay has presented the IMAGINE project at the IPTC Photo Metadata Conference 2017.

- 14 June 2017 – barfi.ch introduced the IMAGINE project in its article “Bessere Bildsuche dank künstlicher Intelligenz”.

- 8 May 2017 – final project meeting in Chur and successful conclusion of the IMAGINE project.

- 17 April 2017 – the IMAGINE API has been successfully tested and evaluated with a test corpus of more than 100 000 images.

- 28 March 2017 – we have significantly improved the reliability of the named entity linking system used in the IMAGINE project.

- 19 January 2017 – IMAGINE project meeting in Zurich.

- 31 October 2016 – we have finalised a first working version of the IMAGINE API.

- 31 July 2016 – the second project report has been submitted to the CTI.

- 11 July 2016 – the initial version of IMAGINE’s keyword clustering and data enrichment components has been finalised.

- 29 June 2016 – the paper “Aspect-Based Extraction and Analysis of Affective Knowledge from Social Media Streams” has been accepted for publication in the IEEE Intelligent Systems journal.

- 22 June 2016 – the article “Detection of Valid Sentiment-Target Pairs in Online Product Reviews and News Media Coverage” has been accepted at the 2016 IEEE/WIC/ACM International Conference on Web Intelligence (WI’2016).

- 24 May 2016 – the paper “A Regional News Corpora for Contextualized Entity Discovery and Linking”, which describes one of the evaluation corpora for the IMAGINE project, has been presented at the Language Resources and Evaluation Conference (LREC 2016).

- 19 April 2016 – IMAGINE’s advanced keyword extraction algorithm, which is suitable for non-standard domains such as creative pictures, has been implemented.

- 1 April 2016 – the project consortium has met in Zurich to outline the next steps for the initial prototype to be developed by 30 September 2016.

- 29 February 2016 – an improved version of the Jeremia text pre-processing component (language detection, sentence splitting, tokenisation, part-of-speech tagging, dependency parsing) used within the IMAGINE project has been released.

- 15 February 2016 – a mock-up version of the IMAGINE REST API has been released for testing.

- 31 January 2016 – we have submitted the first project report to the Commission for Technology and Innovation (CTI).

- 26 January 2016 – the article “A Regional News Corpora for Contextualized Entity Discovery and Linking”, which evaluates the IMAGINE named entity linking component, has been accepted for publication at the tenth edition of the Language Resources and Evaluation Conference (LREC 2016).

- 5 January 2016 – completion of the specification of the IMAGINE REST API.

- 5 November 2015 – IMAGINE project-opening meeting in Zurich.

Implementation

The IMAGINE project is developing advanced methods for automatically extracting metadata from image descriptions and visual content as well as techniques for enriching these with links to persons, organisations, and places. Additionally, the research project will develop ontologies for classifying images and graphics, aiming to improve the discoverability of images/graphics and implement techniques for automatically assigning them to different categories. This makes it possible to filter images based on categories, for example. Images are classified in a fully automated way using procedures from the field of artificial intelligence.

Results

Apart from a clearly improved image search, it will also be possible to automatically group images according to subjects. The links between visual content and background information from sources such as Wikipedia or the Internet Movie Database in turn form the foundation for complex searches.

Users of KEYSTONE products therefore gain the advantage of a user-friendly image search that delivers better results and makes it possible to group images according to criteria that are relevant to the customer. The industrial partners benefit from increased customer satisfaction and lower costs in capturing images, since manual working steps are enhanced by intelligent algorithms. Furthermore, IMAGINE allows for the low-cost capturing of images provided by third-party providers, which will also lead to a considerable increase in their metadata quality.

Besides the current staff of the University of Applied Sciences of the Grisons listed above, the following former project staff and external people were involved in the project:

- Jost Renggli (Venture Valuation, COO & Partner)

- David Caulfield

- Alexandra Dienser

- Patrik Frei

- Aitana Peire

- Markus Läng

- Karl Csoknyay

- Lukas Kobel

- Rütti Sven

- Fabian Odoni

Parties involved

The IMAGINE project is funded by Innosuisse (Commission for Technology and Innovation, CTI) of the Swiss Federal Department of Economic Affairs (FDEA).

The project partners are the University of Applied Sciences of the Grisons (Swiss Institute for Information Research, SII) and KEYSTONE AG, Zurich, the Swiss provider with the most comprehensive offering of visual content: photography, graphics, and video.